OpenAI's Whisper invents parts of transcriptions — a lot

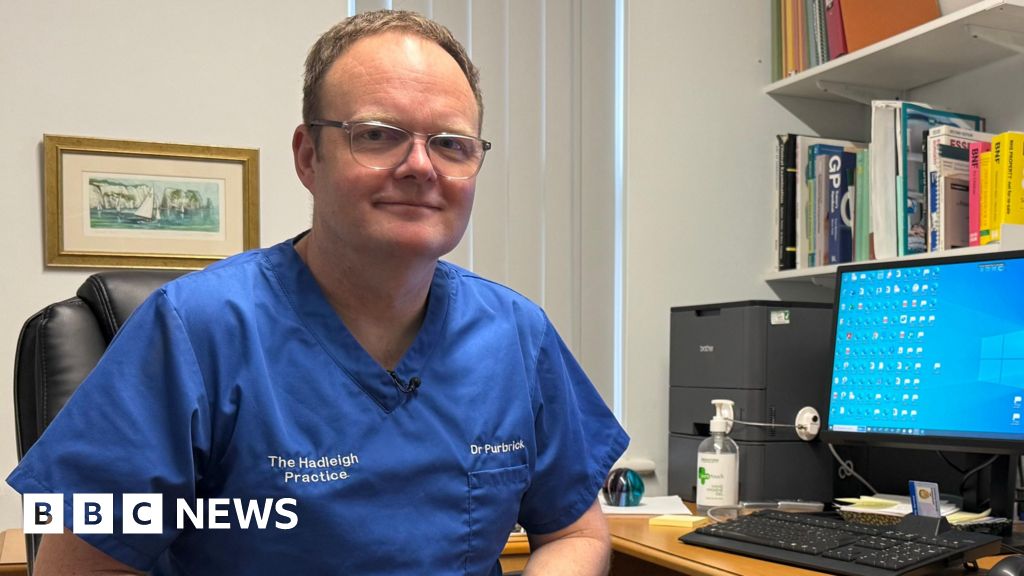

Imagine going to the doctor, telling them exactly how you're feeling and then having a transcription add false information and alter your story. That could be the case in medical centers that use Whisper, OpenAI's transcription tool. Over a dozen developers, software engineers and academic researchers have found evidence that Whisper creates hallucinations (invented text) that include made-up medications, racial commentary and violent remarks, ABC News reports. Yet in the last month, the open-source AI platform Hugging Face saw 4.2 million downloads of Whisper's latest version. The tool is also built into Oracle's and Microsoft's cloud computing platforms, along with some versions of ChatGPT.

The evidence is extensive, with experts finding significant faults in Whisper across the board. Take a University of Michigan researcher who found invented text in eight out of ten audio transcriptions of public meetings. In another study, computer scientists found 187 hallucinations while analyzing more than 13,000 audio recordings. The trend continues: A machine learning engineer found hallucinations in about half of the more than 100 hours of transcriptions analyzed, while a developer spotted them in nearly all of the 26,000 transcriptions he had Whisper create.

The potential danger becomes even clearer in specific examples of these hallucinations. Two professors, Allison Koenecke of Cornell University and Mona Sloane of the University of Virginia, looked at clips from a research repository called TalkBank. The pair found that nearly 40 percent of the hallucinations could cause a speaker to be misinterpreted or misrepresented. In one case, Whisper added that three people being discussed were Black. In another, Whisper changed "He, the boy, was going to, I’m not sure exactly, take the umbrella." to "He took a big piece of a cross, a teeny, small piece ... I’m sure he didn’t have a terror knife so he killed a number of people."

Whisper's hallucinations also have risky medical implications. A company called Nabla uses Whisper for its medical transcription tool, which is used by over 30,000 clinicians and 40 health systems and has so far transcribed an estimated seven million visits. Though the company is aware of the issue and claims to be addressing it, there is currently no way to check the transcripts against what was actually said: The tool erases all audio for "data safety reasons," according to Nabla’s chief technology officer, Martin Raison. The company also claims that providers must quickly edit and approve the transcriptions (with all the extra time doctors have?), but that this system may change. Meanwhile, because of privacy laws, no one else can confirm that the transcriptions are accurate.

This article originally appeared on Engadget at https://www.engadget.com/ai/openais-whisper-invents-parts-of-transcriptions--a-lot-120039028.html?src=rss
